Software

Overview

We built on lessons learned from previous competitions, drawing on our RoboSub, RoboBoat, VRX, and earlier RobotX code. Because our sensitive electronics sit in the port enclosure, isolated from the noisy components in the starboard enclosure, our data was less affected by interference than in previous years.

Perception

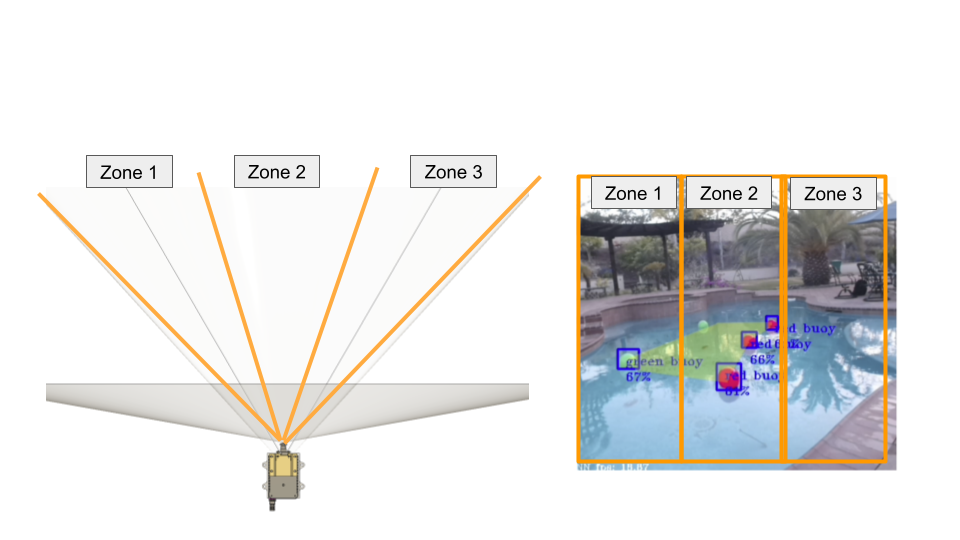

We use three cameras on the front of the vehicle, each with a 120-degree field of view (FOV) and each pointed at a different angle. Because the FOVs overlap, together they provide a continuous 198.5-degree panoramic view ahead of the ASV. Within the software stack, each camera can run its own machine learning model with its own computer vision layered on top, depending on what the mission needs. Additionally, a LiDAR is mounted under each of the port and starboard cameras; this arrangement also gives a full panoramic view of the front of the boat. To handle the heavy LiDAR processing, we built a C++ stack centered on linear matrix operations that both stitches the LiDAR scans together and builds an occupancy grid of the environment. Instead of performing pure sensor fusion, the software correlates zones of the camera view with the corresponding LiDAR regions.
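To make the camera-to-LiDAR zone correlation concrete, here is a minimal Python sketch (our production stack is C++). It rests on several assumptions that are not taken from our actual configuration: the two side cameras are yawed symmetrically about the center camera, which would put them at (198.5 - 120) / 2 = 39.25 degrees; each camera is treated as an ideal pinhole with no lens distortion; and LiDAR points are already expressed in a boat frame with x forward and y to port. The function names and the image width are hypothetical.

```python
import math

HFOV_DEG = 120.0       # per-camera horizontal FOV (from the text)
PANORAMA_DEG = 198.5   # combined coverage (from the text)

# Assumption: side cameras are yawed symmetrically about the center camera.
SIDE_YAW_DEG = (PANORAMA_DEG - HFOV_DEG) / 2.0

# Yaw of each camera's optical axis in the boat frame (positive = toward port).
CAMERA_YAW_DEG = {
    "port": +SIDE_YAW_DEG,
    "center": 0.0,
    "starboard": -SIDE_YAW_DEG,
}


def pixel_to_bearing(u, image_width, camera):
    """Bearing (degrees, positive to port) of pixel column u, ideal pinhole model."""
    half_fov = math.radians(HFOV_DEG / 2.0)
    focal_px = (image_width / 2.0) / math.tan(half_fov)
    # Columns left of the image center look toward port (positive bearing).
    angle = math.degrees(math.atan2(image_width / 2.0 - u, focal_px))
    return CAMERA_YAW_DEG[camera] + angle


def lidar_points_in_zone(points, u_min, u_max, image_width, camera):
    """Return the (x, y) LiDAR points whose bearing falls inside a camera zone."""
    upper = pixel_to_bearing(u_min, image_width, camera)  # left edge of the zone
    lower = pixel_to_bearing(u_max, image_width, camera)  # right edge of the zone
    return [
        (x, y)
        for x, y in points
        if lower <= math.degrees(math.atan2(y, x)) <= upper
    ]
```

For example, `lidar_points_in_zone(points, 600, 680, 1280, "center")` keeps only the points within roughly 6 degrees of dead ahead, which could then supply a range for an object detected in that image zone.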

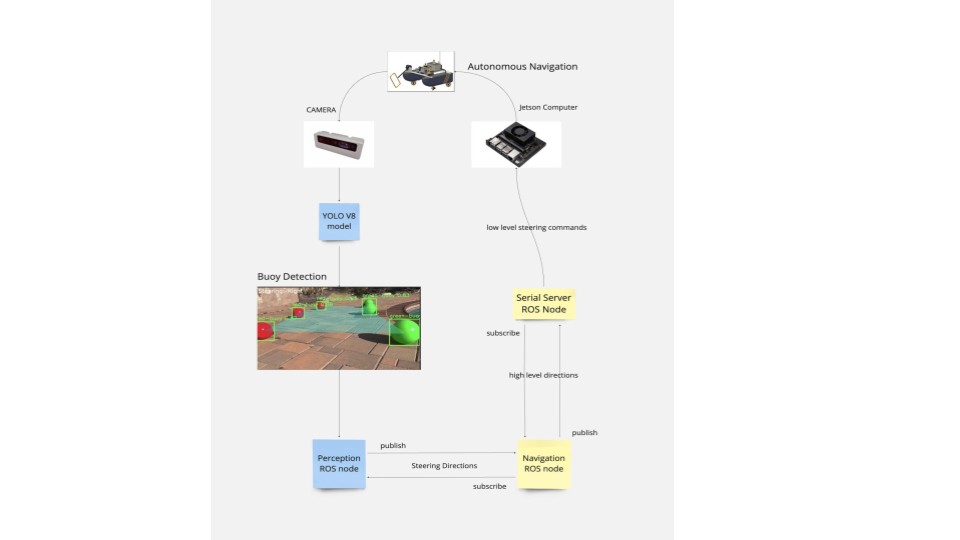
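The stitching and occupancy-grid steps described in this section can be illustrated with a small 2D Python sketch. This is not our C++ implementation: the mounting offsets, grid size, resolution, and function names below are all hypothetical, and a real stack would work on 3D point clouds with calibrated extrinsics.

```python
import math


def transform_points(points, yaw_deg, tx, ty):
    """Rotate sensor-frame (x, y) points by yaw, then translate into the boat frame."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def build_occupancy_grid(points, size_m=20.0, resolution_m=1.0):
    """Mark every cell that contains at least one point.

    The boat sits at the grid center. grid[row][col] indexes x (forward)
    by row and y (port) by column.
    """
    n = int(size_m / resolution_m)
    grid = [[0] * n for _ in range(n)]
    half = size_m / 2.0
    for x, y in points:
        row = math.floor((x + half) / resolution_m)
        col = math.floor((y + half) / resolution_m)
        if 0 <= row < n and 0 <= col < n:  # drop points outside the grid
            grid[row][col] = 1
    return grid


# Hypothetical mounting: each LiDAR sits 1 m off the centerline, unrotated.
port_scan = [(5.0, 0.0)]
starboard_scan = [(5.0, 0.0)]
stitched = (
    transform_points(port_scan, 0.0, 0.0, 1.0)
    + transform_points(starboard_scan, 0.0, 0.0, -1.0)
)
grid = build_occupancy_grid(stitched)
```

Stitching here is just a matter of expressing both scans in one common frame before binning, which is why the work reduces to repeated matrix operations on the point sets.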

Guidance, Navigation, and Control CSE 145 SP 24

For our guidance, navigation, and control (GNC) system, the Spring 2024 CSE 145 capstone student team built a prototype on our RoboBoat 2024 platform. For the computer vision portion of the GNC system, the input is the camera stream and the output is a set of bounding boxes for detected red and green buoys. The detection models we used were YOLOv5 and YOLOv8. For path prediction, the program draws a polygon from the sorted buoy positions and a memory of the boat's location. The midpoint of the path is compared against the horizontal center of the frame, and steering commands are issued based on left and right sensitivity thresholds.
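The midpoint-and-threshold steering idea can be sketched in Python as follows. This is a simplified illustration, not the capstone team's code: it skips the polygon construction and the boat-location memory, it uses only the nearest red and nearest green buoy, and the `Detection` class, the threshold values, and the command strings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # "red" or "green"
    x_min: float  # left edge of the bounding box, in pixels
    x_max: float  # right edge of the bounding box, in pixels
    y_max: float  # bottom edge; a larger value is assumed to mean a closer buoy

    @property
    def center_x(self):
        return (self.x_min + self.x_max) / 2.0


def gate_midpoint(detections):
    """Horizontal midpoint between the nearest red and nearest green buoy."""
    reds = sorted((d for d in detections if d.label == "red"),
                  key=lambda d: d.y_max, reverse=True)
    greens = sorted((d for d in detections if d.label == "green"),
                    key=lambda d: d.y_max, reverse=True)
    if not reds or not greens:
        return None  # no complete gate in view
    return (reds[0].center_x + greens[0].center_x) / 2.0


def steering_command(detections, frame_width,
                     left_threshold=0.1, right_threshold=0.1):
    """Compare the gate midpoint with the frame center and pick a command."""
    midpoint = gate_midpoint(detections)
    if midpoint is None:
        return "hold"
    half_width = frame_width / 2.0
    offset = (midpoint - half_width) / half_width  # normalized to [-1, 1]
    if offset < -left_threshold:
        return "left"
    if offset > right_threshold:
        return "right"
    return "straight"
```

Keeping separate left and right thresholds allows the sensitivity to be tuned independently in each direction, for instance to compensate for a vehicle that tends to drift to one side.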